Science of Information

- 8 minsA brief explanation on how my curiosity lead me here.

I needed a recap on math to get sharp at data structures and algorithms, math visualization offered plenty aha moments, found difficulty keeping focused on statistics. I found a treasure trove of wonder in an Information science course, that had a lot of answers to how we measure the transfer of knowledge and the mechanics of communication.

Here are some notes so far…

Study from Science of Information Technology: From Language to Black Holes @ Great Courses

How Computing Began

- Babbage and the Differential Analyzer

- Ada Lovelace - 1815-1852 - first computer programmer

- Vannevar Bush 1931 - recreates Babbage’s Differential Analyzer

- Claude Shannon - Builds computer out of logic gates from Boolean Logic.

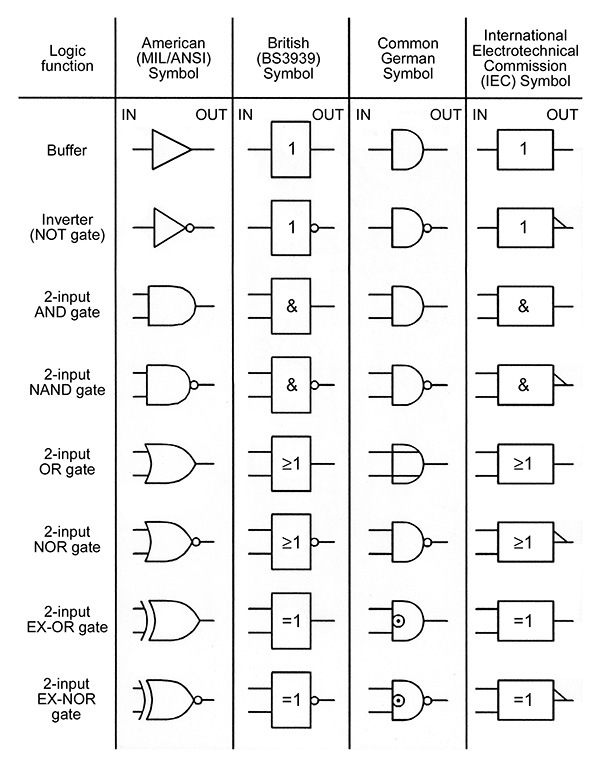

Logic Gate Symbols from nutvolts.com Logic Gates

Logic Gate Symbols from nutvolts.com Logic Gates

- from Boolean Logic & Electric Circuits -> 2 light switches 1 | 0

- John Napier - Logarithm Rules LOG2 (base algorithm)

MAXWELL’S DEMON

- Natural Log

- Information Entropy vs. Entropy in thermodynamics

- Demon’s Info is form of Entropy (Zurek)

Sorting Demon - trap door to a box with heat in motion.

Hartley - Shannon Entropy

- ENTROPY H - minimum # of bits required to represent the source.

Probability (17th Century)

RULES: | (0 = Impossible; 1 = Certain)

- For Any X :

X, 0 ≤ P(X) ≤ 1

0 - 1 = P(X) - E (sum of all) P(X) = 1

_ E T A O I N S H R D L U (common english letters)

- “Letter Probabilities” 19.3%

- Surprise of a message

- “Pre-fix Free Code Word” - no code word can be the 1st part of any other code word.

- Average Code Word >= Entropy of Information Source

- “HUFFMAN CODE” - Best pre-fix free code

SHANNON’S FIRST FUNDAMENTAL THEOREM

- Information Inequality relates to entropy to the number of bits to represent messages.

- Entropy H(X) of a source (the Average Surprise) equals the main average number of bits necessary to code messages from that source.

DATA COMPRESSION

- 1970s GZIP (Israel) - First Data Compression - Analyzes Text & Retruns Compressed File.

Images & Sound Encoding = Runline Encoding (through combination of frequencies) -

1990s JPEG, MPEG, PNG - Joint Photographic Experts Group, Moving Pictures Experts Group, Portable Network Graphics.

- 8x8 pixel block via Huffman Code

- Video - 1 Frame = 2,000,000 Pixels - 3 bytes of color per pixel

- 180 Million Bites of info per sec. (US - 30FPS / UK 25FPS)

- Records difference from “P-Frame / Key-frame” - LOSSLESS Compression

Shannon’s Second Fundamental Theorem:

- “Communication Channel” (Input / Output)

- Defeat Noise

- As long as a channel capacity is not exceeded, then you dont have to communicate slower to be rid of errors.

Richard Hamming - “Hamming Distance” (N, K values)

- Distance from one code to another.

ENCODE : Hamming | Channel : 7, 4 | Error Correction : Binary Code | Decode : 1 Bit of Memory (Err Probability 10-14)

1 radiation induced error per year!

Timeline of Computing Technology

- 1725: Weaving Looms

- 1804: Jacquard Loom - Charles Babbage - Jules Carpentier’s Musical Melotrope

- 1890: Herman Hollerith Data Processing - Punch Cards - US census - Tabulating Company (IBM)

- 1954: Fortran IBM - John Backus | “IF / ELSE” - fortran statement

- 8 BITS = 256 different combinations = BYTE

- Binary -> Compiler -> Language - 1955: Grace Hopper (US Admiral) COBOL - Common Business Oriented Language

- named after Blaise Pascal. “good tracking tool” - 1960: ASCII - American Standards Association creates ANSI (American National Standards Institute)

which developed ASCII - American Standard Code for Information Interchange (character to binary) -

1969: Unix, Linux, Macos - Hacker hats (white: good black: bad) - 1973: C Foundation

- 2001: Lockheed Martin - F35 Stealth Fighter Jet

- “M-LOCKS” - 8 million lines of code in C++ - 2014: Swift / OBJ-C

- TODAY = 700 Languages - GE powers turbines and highways

- Robotics - CNC “Computer Numerically Controlled Lathes” (Milling machines)

- Quantum Computing - manipulating state of electrons to process calculations faster.

BUILDING BLOCKS

- System Programming - OS, C++, Assembly language

- Architectural Programming - Frameworks, Java, Microsoft.Net

- Application Programming - PHP, Ruby, Python, Web Browser

- Data Manipulation - My SQL, NoSQL, MongoDB

Timeline of Information Theory

- 1676 Order & Disorder - philosophy of engineering - heat - steam engines - “Napoleonic Wars”

- 1824 “Reflections on the motive power of fire” by Nicholas Carnot

- Hot -> Cold = change in temp = energy

(dies of cholera and papers are burned) - Rudolph Calcius - Laws of thermodynamics

- entropy = irreversible (meanwhile victorian scientists like Ernest Mach reject atomic theory) - 1844- 1906 Ludwig Boltzman - hot atoms move rapidly

- ENTROPY = measures disorder of things

- 1831 - 1879 JAMES CLERK MAXWELL “MAXWELLS DEMON”

- 1948 Claude Shannon BELL labs - mathematical theory

- bit = atom = 2 states - 1952 Turing - Morphogenisis, Embryo - clumps cells & form skin, self, organization - describes a living process.

- Found the same natural process in chemistry of nature with Boris Belusov’s Human Sugar Consumption. - Bletchley Park WWII codebreaker More on Alan Turing…

- 1960s Newtonian Physics - Precise predictions. Chaos Theory to predict weather - Edward Lorenz (meteorologist)

- 1950s IBM, Benoit, Mandelbrot set, “Thumbprint of God”

- Turings Patterns - Belusov’s Principles - Mandelbrot Fractals

REED SOLOMON CODE

Encode -> Interleave -> Channel -> De-Interleave -> Error Correction -> Decode

Laser Diode scans Polycarbonate Transparent Plastic - Labeled Surface

1/2 Micron wide - Data Layer / "pits"

Dust = Errors - Super Decoder

SCHROEDER - "Perceptual Coding"

- SINEWAVE - spatial frequencies (fourier transform for images)

Image By Cmglee - Own work, CC BY-SA 3.0

SUPER CODER & SUPER CHANNEL

-

OUTER CODER Reed Solomon 28,24 -

InterCoder Reed Solomon 32, 28 - Raw Channel

-

Inner Decoder -

Outer Decoder

Radial errors are easy to correct, CD = corrects 35,000 errors in 1 track

NASA using lossless compression adds Reed-Solomon code

- Voyager - Mission to uranus and neptune - more precise data transactions due to error corrections.

FAR REACHING SIGNALS & BANDWITH

DIGITAL:

-> Information in a finite number of discrete alternatives

-> Boolean Logic Gates

-> Logic Gates

ANALOG:

-> Radio / Sound

-> Differential Engine

-> Continous Variables

-> Time & Velocity

-> Amplitude & Modulation

-> Frequency

Joseph Fourier - Fourier Transform

- Any signal can be regarded as a mixture of simple sinewaves of different frequencies.

Fourier Carrier AM/FM Waves - image from wikipedia | Bandwith- image from wikipedia

Nyquist Shannon Sampling Theorem

CRYPTOGRAPHY

- Kerckhoff’s Principle -> lock has 6 tumblr pins - 5-10 mil, 8 possible lengths

- Log2(8) = 3 bits per pin - 6 pins

- Julius Cesar Cipher -

-> Plain Text -> Cipher -> Cipher Text -> Encryption -> Encipherment Msg -> Rule 2 Convert -> Converted Msg -> Transforming 2 plain text (babbage kasiski method)

CRYPTANALYSIS - Frequency Analysis

- TURING’S BOMBE - enigma - Arthur Zimmerman - Linear B (Alice Kobel, Micheal Ventris)

- Shannon - Communication theory of secrecy systems. - Entropy.

- Redundancy (D) D = Hc - Hp - John Hadwick - info gained = # of letters X Bits / Letter

- information basis for cryptanalysis - Speech Encipherment - SIG SALLY system - pulse code modulation - “Calculation Complexity”

- Neumann - Computer & the Brain - Entropy - shannon & neumann

- TURING’S Universal Machine

- Algorithmic Information - k2k(m) - algorithmic entropy -> for any particular string of bits, h= ave(k) (Shannon Entropy)